$3 per month. Open-source. Beats Claude Sonnet 4.5 on coding benchmarks. Runs locally for free. Released two days before Christmas by a Chinese company most Americans have never heard of.

GLM-4.7 just dropped as an open-source release, and it’s the second time this year a model from China has made Silicon Valley sweat. If DeepSeek in January was a wake-up call, this is the alarm still ringing in December.

Here’s what you need to know, why it matters, and what I actually think about it.

What Is GLM-4.7?

GLM-4.7 is the latest large language model from Z.ai (also known as Zhipu AI), a Beijing-based company that’s been quietly building competitive AI systems while American tech giants grab headlines.

Unlike earlier chatbot-focused models, GLM-4.7 is built specifically for what developers call “agentic coding.” That means it’s designed to handle real software development tasks: fixing bugs across multiple files, running terminal commands, and working autonomously for extended periods without losing track of what it’s doing.

The model is completely open-source. You can download the weights from HuggingFace, run it on your own hardware, and never pay a cent. Or use it through Z.ai’s API for roughly $3/month, which they claim is one-seventh the cost of comparable Western alternatives.

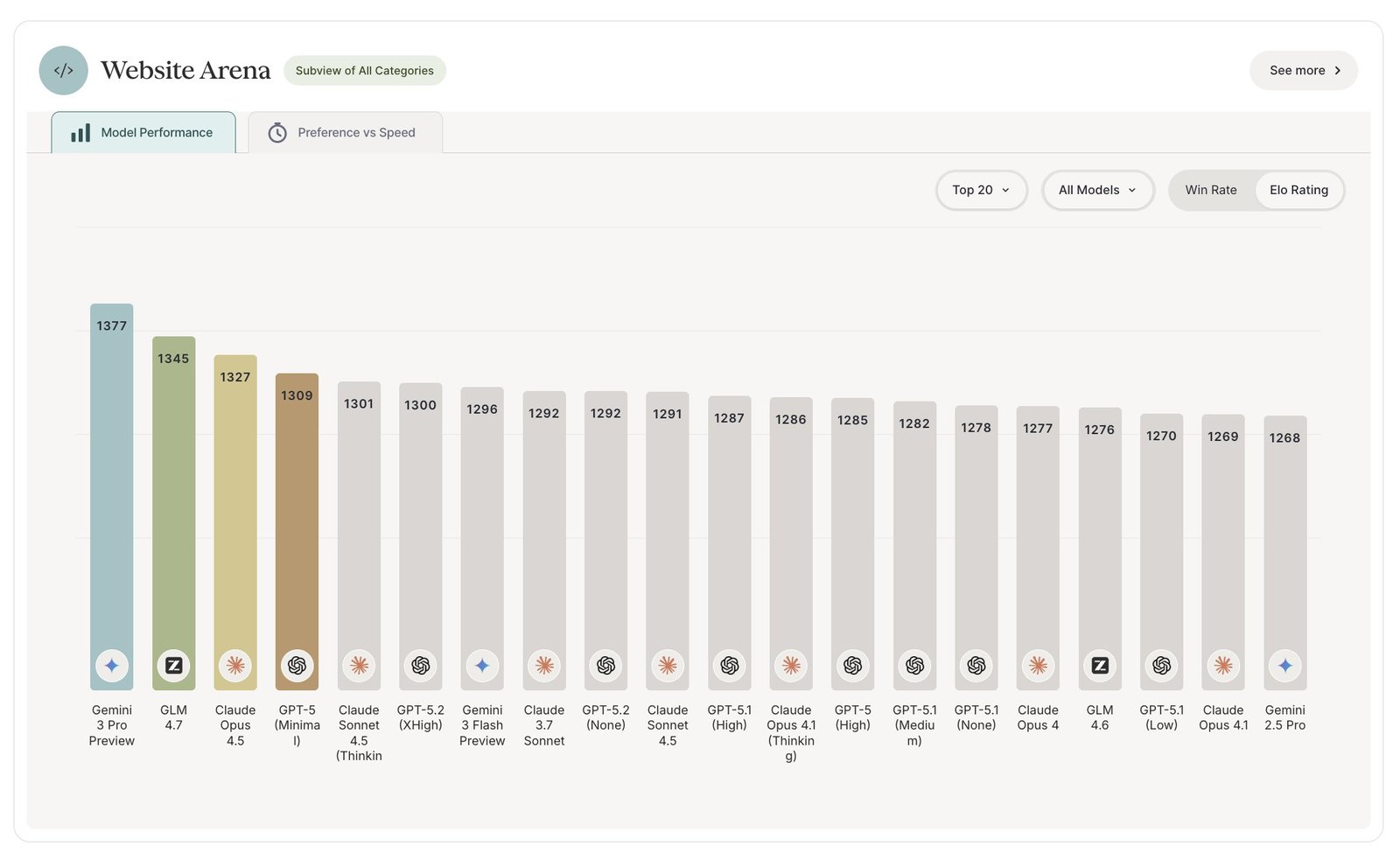

The GLM-4.7 Open Source Benchmark Numbers

This is where it gets interesting.

On SWE-bench Verified, which tests how well models fix real GitHub issues, GLM-4.7 scores 73.8%. That’s the highest among open-source models and a 5.8-point jump from its predecessor.

On LiveCodeBench, which scores models on recently published competitive programming problems, it hits 84.9%, surpassing Claude Sonnet 4.5.

On HLE with tools (Humanity’s Last Exam, a brutally hard reasoning test), GLM-4.7 ties GPT-5.1 High at 42.7% and beats Claude Sonnet 4.5’s 32% by a wide margin.

On math (AIME 2025), it scores 95.7%, edging out Gemini 3.0 Pro and GPT-5 High.

The benchmarks tell a consistent story: this is a serious competitor to models that cost 7x more.
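To make those margins concrete, here’s a small sketch that recomputes a few gaps from the figures quoted in this post. The variable and function names are mine, purely for illustration, and the only inputs are numbers already stated above.

```python
# Figures quoted in this post; the layout and names are illustrative only.
GLM_47_SWE_BENCH = 73.8    # SWE-bench Verified score, percent
SWE_BENCH_GAIN = 5.8       # jump over the predecessor, percentage points
GLM_47_HLE = 42.7          # HLE with tools, percent
SONNET_45_HLE = 32.0       # Claude Sonnet 4.5 on HLE with tools, percent
GLM_MONTHLY_USD = 3        # Z.ai's quoted price per month
CLAIMED_COST_RATIO = 7     # "one-seventh the cost", per Z.ai's claim


def implied_predecessor_score(current: float, gain: float) -> float:
    """Score the previous model must have had, given the quoted gain."""
    return round(current - gain, 1)


def point_gap(a: float, b: float) -> float:
    """Difference between two scores in percentage points."""
    return round(a - b, 1)


def implied_comparable_price(price: float, ratio: float) -> float:
    """Monthly price of a 'comparable' plan implied by the cost claim."""
    return price * ratio


if __name__ == "__main__":
    print(implied_predecessor_score(GLM_47_SWE_BENCH, SWE_BENCH_GAIN))
    print(point_gap(GLM_47_HLE, SONNET_45_HLE))
    print(implied_comparable_price(GLM_MONTHLY_USD, CLAIMED_COST_RATIO))
```

In other words, the quoted numbers imply a predecessor at 68.0% on SWE-bench Verified, a gap of 10.7 points over Claude Sonnet 4.5 on HLE, and a comparison price of about $21/month.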

What Makes GLM-4.7 Different

Three features stand out:

Preserved Thinking: In long coding sessions, most AI models gradually “forget” what they were working on. GLM-4.7 retains its reasoning blocks across multiple turns, reducing the information loss that makes extended tasks frustrating.

Turn-level Thinking Control: You can disable reasoning for simple requests (faster, cheaper) and enable it for complex tasks (more accurate). This flexibility is genuinely useful when you’re bouncing between quick questions and deep problems.

“Vibe Coding” Quality: The model generates cleaner, more modern-looking user interfaces by default. When you’re getting started with AI tools, the visual output matters, and Z.ai apparently paid attention to that.
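To give a feel for turn-level thinking control, here’s a rough sketch that builds a chat request and flips reasoning on or off for each turn. Treat the details as assumptions: the `thinking` field shape, the `glm-4.7` model ID, and the keyword heuristic are all illustrative, so check Z.ai’s API docs for the real parameter names. Nothing here touches the network.

```python
from typing import Any

# Assumed model identifier; confirm against the provider's model list.
MODEL_ID = "glm-4.7"

# Hypothetical heuristic: words that suggest a task needs deeper reasoning.
COMPLEX_HINTS = ("refactor", "debug", "across files", "architecture")


def needs_thinking(prompt: str, min_length: int = 200) -> bool:
    """Guess whether a prompt is complex enough to justify reasoning."""
    lowered = prompt.lower()
    return len(prompt) >= min_length or any(h in lowered for h in COMPLEX_HINTS)


def build_request(history: list[dict[str, str]], prompt: str) -> dict[str, Any]:
    """Return a chat payload with thinking toggled for this turn only."""
    mode = "enabled" if needs_thinking(prompt) else "disabled"
    return {
        "model": MODEL_ID,
        "messages": [*history, {"role": "user", "content": prompt}],
        # Assumed field shape for the per-turn reasoning switch.
        "thinking": {"type": mode},
    }


if __name__ == "__main__":
    quick = build_request([], "What does HTTP 404 mean?")
    deep = build_request([], "Debug this failing test across files")
    print(quick["thinking"], deep["thinking"])
```

The point of deciding per request rather than per session is that quick questions stay fast and cheap while harder ones still get the full reasoning pass.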

The Irony: It Works With Claude Code

Here’s the part that made me laugh.

GLM-4.7 already integrates with popular AI coding tools including Claude Code (Anthropic’s CLI), Cline, Roo Code, and Kilo Code. You can literally use a Chinese model that outperforms Claude on certain benchmarks through Anthropic’s own coding framework.

This is the opposite of a walled garden. Z.ai built their model to work with whatever developers are already using, even if that’s a competitor’s ecosystem.
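If you’re curious what that wiring might look like, here’s a minimal sketch. It assumes Claude Code reads `ANTHROPIC_BASE_URL` and `ANTHROPIC_AUTH_TOKEN` from the environment, and the URL below is a placeholder rather than Z.ai’s real endpoint, so take the actual value from their documentation.

```python
import os

# Placeholder only: substitute the Anthropic-compatible endpoint from Z.ai's docs.
PLACEHOLDER_BASE_URL = "https://example.invalid/anthropic"


def claude_code_env(api_key: str, base_url: str = PLACEHOLDER_BASE_URL) -> dict[str, str]:
    """Copy the current environment and point Claude Code at another backend."""
    env = dict(os.environ)  # copy, so the parent process stays untouched
    env["ANTHROPIC_BASE_URL"] = base_url
    env["ANTHROPIC_AUTH_TOKEN"] = api_key
    return env


if __name__ == "__main__":
    env = claude_code_env("your-api-key")
    print(env["ANTHROPIC_BASE_URL"])
```

You could then pass the result to something like `subprocess.run(["claude"], env=env)`, assuming the `claude` CLI is installed and on your PATH.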

Why This GLM-4.7 Open Source Release Matters

If you’ve been following AI news this year, this feels familiar. In January, DeepSeek released a model that matched Western competitors at a fraction of the cost, briefly wiping more than half a trillion dollars off Nvidia’s market value.

GLM-4.7 is the same pattern: competitive performance, dramatically lower cost, full open-source access.

For developers, this is genuinely good news. More competition means better tools at lower prices. Open weights mean you can run models locally, keeping your code private and avoiding API costs entirely.

For the big AI labs? This is another reminder that the moat they thought they had might not be as deep as they assumed. When a model priced at $3/month can beat your $200/month offering on key benchmarks, your pricing strategy needs a rethink.

ℹ️ Why this matters for regular users: Competition from open-source models drives down prices across the industry. Even if you never use GLM-4.7 directly, it puts pressure on ChatGPT and Claude to offer more for less.

Should You Try GLM-4.7?

If you’re a developer who uses AI coding assistants, yes. It’s free to try locally, cheap through the API, and the benchmark numbers suggest it’s worth serious consideration.

If you’re a regular person who just uses ChatGPT or Claude for everyday tasks, this probably won’t change your life today. But it’s a signal that the AI tools you use are going to get cheaper and more capable faster than anyone predicted.

The quick take: GLM-4.7 is real competition, not vaporware. It’s available now through Z.ai, OpenRouter, HuggingFace, and ModelScope.

New to AI tools and not sure where to start? Check out our beginner’s guide to everyday AI for practical ways to use these tools without the hype.
