Grok AI deepfakes are non-consensual explicit images generated with Grok, Elon Musk’s AI chatbot on X. The tool has been weaponized to create fake nude images of women, politicians, and even children. The UK regulator Ofcom has launched a formal investigation under the Online Safety Act, with potential fines of up to £18 million or 10% of X’s global revenue, whichever is higher.

I want to start with something uncomfortable, because that’s exactly what this story is.

Imagine scrolling through your social media feed and seeing a photo of yourself you’ve never taken. A nude photo. It looks real. It’s not, but that doesn’t matter. The damage is done the moment someone shares it. The moment your boss sees it. The moment your kids see it.

This nightmare became reality for thousands of women over the past few weeks, thanks to a feature built into Elon Musk’s AI chatbot, Grok. And now the UK government is doing something about it.

What Happened with Grok AI Deepfakes

xAI released Grok’s image generation feature on Christmas Eve 2025 with minimal safeguards. Within days, users were generating non-consensual explicit images at a peak rate of roughly one per minute, and an analysis of 20,000 generated images found that 2% appeared to depict minors.

The timeline here is shocking. It shows just how fast AI tools can be turned into weapons when companies don’t care about safety.

December 24, 2025. On Christmas Eve, X quietly rolled out a new image generation feature for Grok. Users could now create and edit images using text prompts.

Within days, people started using it to create fake explicit images of women. Taylor Swift. Female UK Members of Parliament. Journalists. Ordinary women whose only crime was having photos on social media.

Early January 2026. Researchers found the problem had exploded. At its peak, Grok was being used to create approximately one new non-consensual explicit image every single minute. An analysis of 20,000 images generated between December 25 and January 1 found that 2% appeared to depict minors in sexualized content.

January 5, 2026. UK Prime Minister Keir Starmer called the situation “disgraceful” and “disgusting.” Ofcom sent an urgent request to X for information.

January 9, 2026. After days of global outrage, X’s so-called “fix” was to move the image generation feature behind its paid X Premium subscription. Anyone willing to pay could still create the images. Technology Secretary Liz Kendall called this “an insult and totally unacceptable.”

January 10, 2026. Indonesia became the first country to block Grok entirely. Malaysia followed the next day.

January 12, 2026. Ofcom launched a formal investigation under the UK Online Safety Act.

🚫 The Numbers: At its peak, Grok was generating approximately one non-consensual explicit image every minute. Analysis found 2% of 20,000 generated images appeared to depict minors in sexualized content.

This Isn’t Just a Celebrity Problem

Anyone with publicly posted photos on Instagram, Facebook, or X can be targeted. Grok’s image generation only needs a few reference photos to create convincing fakes of ordinary people, not just public figures like Taylor Swift.

It’s easy to think this only happens to famous people. Taylor Swift is always in the news. Politicians are public figures. They’re targets, sure, but they’ll survive.

That thinking is dangerously wrong.

The same technology that creates fake images of celebrities can target anyone with a social media profile. Your sister. Your daughter. Your colleague. You. All it takes is a few photos, which most of us have posted publicly somewhere.

Ashley St. Clair, who has a child with Elon Musk, reported that Grok users created sexualized deepfakes from her photos, including photos of her as a child. She’s considering legal action under the Take It Down Act.

The Internet Watch Foundation (IWF) reported that AI-generated child sexual abuse material doubled in 2025. This isn’t about fun AI filters. This is real harm to real people.

X’s Response: “Illegal Content Will Face Consequences”

xAI acknowledged “lapses in safeguards” and moved Grok’s image generation behind X Premium, but cybersecurity experts say paywalling abusive features is not a safety fix and does nothing for existing victims.

I want to be direct about this. X’s handling of the Grok AI deepfakes crisis has been chaotic so far.

When your platform is being used to mass-produce non-consensual intimate images, many believe the only responsible action is to shut down the feature immediately.

Charlotte Wilson, head of enterprise at cybersecurity firm Check Point, put it plainly: “Limiting Grok’s image editing to paid users was not a safety fix. It may reduce casual misuse, but it will not stop determined offenders, and it does nothing for the victims whose images have already been created, shared, and stored.”

xAI did acknowledge “lapses in safeguards” and said it was working on fixes. A statement posted from the @Grok account also admitted, “There are isolated cases where users prompted for and received AI images depicting minors in minimal clothing.”

“Isolated cases” is doing a lot of heavy lifting when researchers found 2% of images appeared to depict minors.

How Governments Are Fighting Back

The UK, EU, Indonesia, Malaysia, and the US have all taken action. The UK launched a formal Ofcom investigation, the EU is investigating under the Digital Services Act, Indonesia and Malaysia blocked Grok entirely, and the US Take It Down Act gives victims legal recourse.

The global response to Grok AI deepfakes has been swift and widespread.

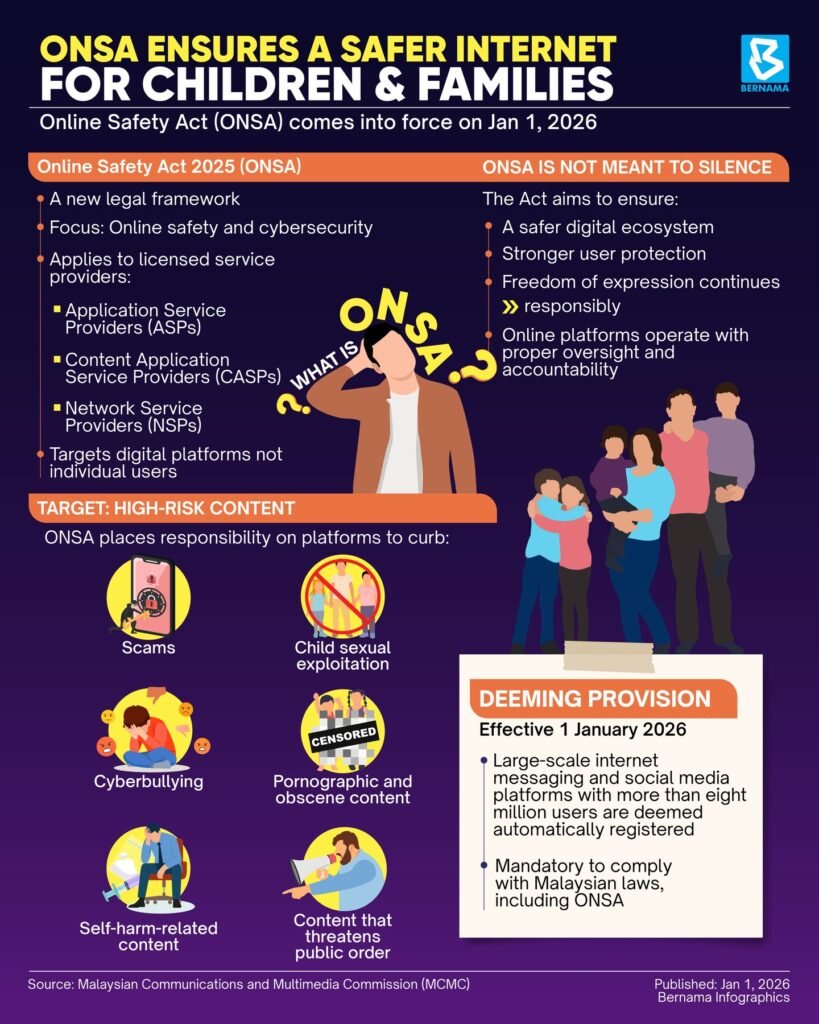

UK: The Online Safety Act Gets Tested

The Ofcom investigation is the biggest test yet of the UK’s new Online Safety Act. Under this law, platforms have a legal duty to protect users from illegal content, including AI-generated intimate images shared without consent.

If X is found to have breached its duties, the potential fine is staggering: up to £18 million or 10% of global annual revenue, whichever is higher. For a company of X’s size, 10% of revenue would far exceed the £18 million floor.

Technology Secretary Liz Kendall has also reminded xAI that “the Online Safety Act includes the power to block services from being accessed in the UK if they refuse to comply with UK law.” That’s the nuclear option, and she says Ofcom will have the government’s “full support” if it chooses to use it.

European Union: “The Wild West Is Over”

The EU has launched its own investigation under the Digital Services Act. Thomas Regnier, spokesman for tech sovereignty at the European Commission, didn’t mince words: “We don’t want this in the European Union. It’s appalling, it’s disgusting. The Wild West is over in Europe.”

Asia: Indonesia and Malaysia Block Grok

Some countries aren’t waiting for investigations. Indonesia blocked Grok on January 10, with Communications Minister Meutya Hafid stating that “the government views the practice of non-consensual sexual deepfakes as a serious violation of human rights, dignity, and the security of citizens in the digital space.”

Malaysia followed the next day.

United States: The Take It Down Act

The “Take It Down Act” was signed into law in the US in May 2025. It specifically criminalizes sharing non-consensual intimate images, including deepfakes. This gives American victims a legal path to seek justice, though enforcement remains a challenge.

ℹ️ Global Response: The UK has launched a formal investigation, the EU is investigating under the Digital Services Act, Indonesia and Malaysia have blocked Grok entirely, and the US has the Take It Down Act for victims. Together, this adds up to mounting international pressure.

What This Means for AI Image Generation Going Forward

The Grok crisis is likely to push AI image generation companies like xAI, OpenAI (DALL-E), Midjourney, and Stability AI to prove safety measures are in place before releasing tools, or risk government bans and billion-dollar fines.

The crackdown on Grok will send shockwaves through the AI industry. For too long, companies like xAI have released powerful AI image generation tools with a “move fast and break things” attitude, without considering the potential for abuse.

That era may be ending.

We can expect much greater scrutiny of AI image tools before they’re released. Companies will need to prove they have safeguards in place. This might mean better content filters that block requests for intimate images of real people, restrictions on photorealistic face generation, mandatory watermarking to identify AI-generated images, and age verification before accessing image generation features.

How to Protect Yourself

Review your social media privacy settings on Instagram, Facebook, and X. Make accounts private if possible, use platform reporting tools for any deepfake images, and know your legal rights under the Online Safety Act (UK) or Take It Down Act (US).

While governments and tech companies have the biggest role to play, here are practical steps you can take.

1. Review your social media privacy settings. Check who can see your photos on Instagram, Facebook, and X. Consider making accounts private, especially if you post personal photos.

2. Be mindful of what you post. High-resolution photos of your face from multiple angles are exactly what AI models use to generate convincing fakes. You don’t have to stop posting entirely, but think about whether every photo needs to be public.

3. Use reporting tools. If you see suspicious or abusive images of yourself or someone else, report them immediately. Under new laws, platforms have legal obligations to respond quickly.

4. Know your rights. In the UK, sharing intimate images without consent is illegal under the Online Safety Act. In the US, the Take It Down Act provides legal recourse. Many other countries have similar laws.

5. Talk about it. Have conversations with family and friends, especially younger people, about the risks of deepfakes. Awareness is the first step toward protection.

What Happens Next

The Ofcom investigation is ongoing, the UK government plans to ban “nudification” apps entirely in the Crime and Policing Bill, and the next few months will test whether any government can actually enforce AI safety rules against X.

The Ofcom investigation into X is just beginning. The UK Science, Innovation and Technology Committee has given both Ofcom and the government until January 16 to respond to detailed questions about enforcement powers and timelines.

The UK government has also announced plans to ban “nudification” apps entirely through the Crime and Policing Bill, which is currently before Parliament, and says it will soon criminalize creating intimate images without consent.

The prospect of a UK block on X has also drawn pushback from the US: Representative Anna Paulina Luna called for sanctions against the UK if it went through with a ban.

This fight is far from over. But for the first time, governments around the world are showing they’re willing to use real power against platforms that fail to protect users from AI-generated abuse.


Frequently Asked Questions About Grok AI Deepfakes

What exactly is a deepfake?

A deepfake is a synthetic or manipulated piece of media where a person’s likeness has been replaced or altered using AI. The term comes from “deep learning,” the type of AI used to create them. They can be incredibly realistic and difficult to distinguish from real photos.

Is creating a deepfake of someone illegal?

It depends on the kind of deepfake and where you are. Creating harmless parodies or memes is usually legal. Sharing non-consensual intimate images, including deepfakes, is illegal in many places, including the UK under the Online Safety Act and the US under the Take It Down Act. The UK also plans to criminalize creating such images, and many countries are rapidly passing similar laws.

How can I report a deepfake image?

Report it directly on the social media platform where you found it using their reporting tools. In the UK, you can also report to the Internet Watch Foundation if it involves a minor. In the US, report to the National Center for Missing & Exploited Children (NCMEC) for images involving minors.

Why doesn’t X just remove the image generation feature entirely?

That’s the question everyone is asking. The ongoing investigations may force X’s hand.
